TreeNet Gradient Boosting

Introduction

TreeNet Gradient Boosting is a revolutionary advance in data mining technology developed by Jerome Friedman, one of the world’s outstanding data mining researchers. It offers exceptional accuracy, blazing speed, and a high degree of fault tolerance for messy and incomplete data. It can handle both classification and regression problems and is remarkably effective in traditional numeric data mining and text mining. TreeNet Gradient Boosting can deal with substantial data quality issues, decide how a model should be constructed, select variables, detect interactions, address missing values, ignore suspicious data values, and resist overfitting. In addition, it offers a degree of accuracy usually not attainable by a single model or by ensembles such as bagging or conventional boosting. It has been tested in a broad range of industrial and research settings and has demonstrated considerable benefits. Applications and tests in text mining, fraud detection, and creditworthiness have shown TreeNet to be dramatically more accurate on test data than competing methods.

What is TreeNet Gradient Boosting?

TreeNet Gradient Boosting is one of the most flexible and powerful techniques for building predictive models, capable of consistently generating extremely accurate models. The TreeNet modelling engine’s level of accuracy is usually not attainable by single models or by ensembles such as bagging or conventional boosting. Most importantly, CART decision trees are the foundation of TreeNet Gradient Boosting and its applications.

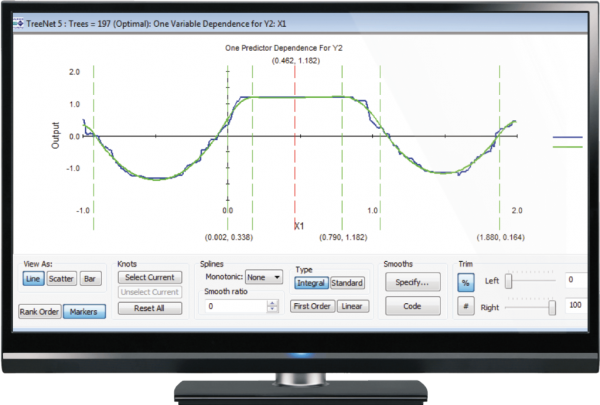

A TreeNet model normally consists of several hundred to several thousand small trees, each typically containing about six terminal nodes. Each tree contributes a small portion to the overall model, and the final model prediction is the sum of all the individual tree contributions. TreeNet offers detailed self-testing to document its reliability on your own data. You need not be concerned with the model’s complexity, because all results, performance measures, and explanatory graphs can be understood by anyone familiar with basic data mining principles.
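The additive structure described above (many small trees, each contributing a small portion, with the prediction formed as their sum) can be illustrated with a minimal sketch. This is not the TreeNet implementation: it is a generic gradient boosting loop for squared-error regression, and it assumes two-leaf trees (stumps) on a single predictor instead of six-node trees to keep the code short. The function names, the `n_trees` and `learning_rate` parameters, and the toy data are all hypothetical.

```python
# Illustrative sketch only: generic gradient boosting with two-leaf trees
# (stumps) on one predictor. Not the TreeNet engine or its API.

def fit_stump(xs, residuals):
    """Find the single split on x that best fits the residuals (least squares)."""
    distinct = sorted(set(xs))
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2.0
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted_model(xs, ys, n_trees=200, learning_rate=0.1):
    """Fit each new stump to the current residuals and add a shrunken copy."""
    base = sum(ys) / len(ys)              # start from the mean of the target
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Each tree adds only a small portion (learning_rate) of its fit.
        predictions = [p + learning_rate * stump_predict(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, stumps, learning_rate


def predict(model, x):
    """Final prediction = base value + sum of all individual tree contributions."""
    base, stumps, learning_rate = model
    return base + sum(learning_rate * stump_predict(s, x) for s in stumps)


# Toy data (hypothetical): y = x squared on a small grid.
xs = [i / 10.0 for i in range(50)]
ys = [x * x for x in xs]

model = fit_boosted_model(xs, ys)
mean_y = sum(ys) / len(ys)
baseline_mse = sum((mean_y - y) ** 2 for y in ys) / len(ys)
mse = sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print("baseline MSE:", baseline_mse, "boosted MSE:", mse)
```

Because every stump is fitted to what the current model still gets wrong, later trees concentrate on the cases that are hardest to predict, and the small learning rate keeps any single tree from dominating the sum.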

Key Features

- Automatic selection from thousands of predictors. No prior variable selection is required.

- Ability to handle data without pre-processing. Data don’t need to be rescaled, transformed, or modified in any way.

- Resistance to outliers in predictors or the target variable.

- Automatic handling of missing values.

- General robustness to dirty and partially inaccurate data.

- As the model evolves, TreeNet is able to focus on the data that are harder to predict.

TreeNet Gradient Boosting in Salford Predictive Modeler

Salford Predictive Modeler (SPM) is an integrated suite of machine learning and predictive analytics software. It includes various data mining techniques such as classification, clustering, association and prediction, along with regression, survival analysis, missing value analysis, data binning and many more. SPM is a highly accurate and ultra-fast platform for developing predictive, descriptive, and analytical models from databases and datasets of any size, complexity, or organisation. The software suite includes CART, MARS, TreeNet, and Random Forests, as well as powerful new automation and modelling capabilities not found elsewhere.

TreeNet is a proprietary methodology developed in 1997 by Stanford’s Jerome Friedman, a co-author of CART and the author of MARS. TreeNet implements the gradient boosting algorithm and was originally written by Friedman himself, whose code and proprietary implementation details are exclusively licensed to Salford Systems.

The TreeNet modelling engine adds the advantage of a degree of accuracy usually not attainable by a single model or by ensembles such as bagging or conventional boosting. Unlike neural networks, the TreeNet methodology is not sensitive to data errors and needs no time-consuming data preparation, pre-processing or imputation of missing values. Its robustness extends to data contaminated with erroneous target labels. This type of data error can be very challenging for conventional data mining methods and can be catastrophic for conventional boosting. In contrast, a TreeNet model is generally immune to such errors because it dynamically rejects training data points that are too much at variance with the existing model.
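The text above does not say how TreeNet decides that a data point is too much at variance with the existing model, so the following is only a related illustration, not TreeNet's mechanism. It shows Huber-style clipping, a standard way for gradient boosting to limit (rather than fully reject) the influence of extreme residuals: residuals larger than a cut-off, taken here as a quantile of the absolute residuals, are capped before the next tree is fitted. The function name, the `quantile` parameter, and the toy data are hypothetical.

```python
# Illustrative sketch only: Huber-style clipping of residuals, a swapped-in
# technique used to show the general idea. Not the TreeNet engine or its API.

def huber_pseudo_residuals(ys, predictions, quantile=0.9):
    """Cap residuals whose magnitude exceeds the chosen quantile of |residual|."""
    residuals = [y - p for y, p in zip(ys, predictions)]
    abs_sorted = sorted(abs(r) for r in residuals)
    index = min(len(abs_sorted) - 1, int(quantile * len(abs_sorted)))
    delta = abs_sorted[index]             # cut-off for "too far from the model"
    clipped = [max(-delta, min(delta, r)) for r in residuals]
    return clipped, delta


# Toy data (hypothetical): the last target value is a gross error.
ys = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.3, 1.0, 0.9, 100.0]
predictions = [1.0] * len(ys)             # current model predicts 1.0 everywhere

pseudo, delta = huber_pseudo_residuals(ys, predictions, quantile=0.8)
print("cut-off:", delta)
print("raw residual of outlier:", ys[-1] - predictions[-1])
print("clipped residual of outlier:", pseudo[-1])
```

The next tree would be fitted to `pseudo` instead of the raw residuals, so the erroneous value can pull the model no harder than an ordinary large residual would.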


Why use TreeNet Gradient Boosting?

TreeNet Gradient Boosting is the most flexible and powerful data mining tool, capable of consistently generating extremely accurate models.

It is not overly sensitive to data errors and needs no time-consuming data preparation, pre-processing, or imputation of missing values.

TreeNet Gradient Boosting is especially adept at dealing with errors in the target variable, a type of data error that could be catastrophic for a neural net.

It is resistant to overtraining and can be 10 to 100 times faster than a neural net. Finally, TreeNet is not troubled by hundreds or thousands of predictors.
