Design of Experiments (DOE) | Experimental Design

Introduction

Design of Experiments (DOE), or experimental design, plays an important role in process and product realization activities, which include new product design and formulation, manufacturing process development and process improvement. A process is a combination of operations, machines, methods, people and other resources that transforms some input into an output. All processes used in manufacturing organisations are subject to variation. The sources of variation are combinations of materials, equipment, methods and environmental conditions. These occur naturally and must be accommodated in the process design if the desired output characteristics are to be obtained reliably.

Definition

During the set-up of new manufacturing processes, it is important to understand the impact of the variables that are used to achieve optimum performance and to understand the effect of natural variation on the output. Design of Experiments is a methodology for systematically applying statistics to this experimentation. It consists of a series of tests in which purposeful changes are made to the input variables of a product or process so that one may observe and identify the reasons for the resulting changes in the response. DOE provides a quick and cost-effective method to understand and optimize products and processes.

Design of experiments was pioneered by Ronald A. Fisher in the 1920s and 1930s at Rothamsted Experimental Station, an agricultural research centre in Harpenden, north of London. During that time, Fisher was the central figure and was responsible for statistics and data analysis at the station.

The experimental design method was first used in the agricultural sector. Fisher recognized that flaws in the way an experiment was run to generate the data often hampered the analysis of data from agricultural systems. To overcome this, he developed the three basic principles of experimental design: randomization, replication and blocking. He then systematically introduced statistical thinking and principles for designing experimental investigations. He also introduced the factorial design concept and the analysis of variance.

In his first book on the design of experiments, Fisher showed how valid conclusions could be drawn efficiently from experiments subject to natural fluctuations such as temperature, soil conditions and rainfall, that is, in the presence of nuisance variables (in a manufacturing setting, batch-to-batch variation is a typical example). He and his colleague Frank Yates developed many of the concepts and procedures that we use today (block designs, sample surveys, Yates’s algorithm).

After World War II, W. Edwards Deming taught statistical methods, including DOE, to Japanese engineers and managers as part of the country's redevelopment. Later, Genichi Taguchi, a well-known Japanese engineer and statistician, applied DOE at Toyota for quality improvement. Since then, it has been applied successfully in various industries and scenarios.

By experimental design, we mean a plan used to collect the data relevant to the problem under evaluation in such a way as to provide a basis for valid and objective inference about the stated problem. It helps us make good decisions about a process or its output. The plan usually consists of the selection of treatments whose effects are to be examined, the specification of the experimental layout, the assignment of treatments to the experimental units and the collection of observations for analysis.

Consider a manufacturing process in which input variables (such as raw material) are transformed into the final product. The product has various quality characteristics which depend on the functioning of the process. The manufacturing process and the resulting product are affected by various influences, the so-called factors, which determine the quality of the product. There are controllable factors, which can be set precisely during the manufacturing process, such as cutting speed or depth of cut. There are also noise factors, which affect the manufacturing process but cannot easily be controlled, such as environmental conditions, temperature fluctuation or changes in the raw material.

One of the main objectives of designing an experiment is to verify a hypothesis in an efficient and economical way. There are also many applications of designed experiments in nonmanufacturing or non-product-development settings, such as marketing, service operations and general business operations.

- Experiment – A procedure carried out to make a discovery, test a hypothesis or understand cause-and-effect relationships.

- Experimental unit – The experimental material is divided into smaller parts, and each part is referred to as an experimental unit. It is the unit to which a treatment is applied. Examples are a manufactured item, a plot of land, etc.

- Factors (input variables) – A factor is an input variable that the experimenter deliberately varies to study its effect on the response. It may be categorical or continuous, e.g. machine or temperature.

- Levels – A level is a particular value or category of a factor, e.g. two values of temperature, pressure or time.

- Treatment – Each factor has two or more levels, i.e. different values of the factor. A combination of factor levels that describes how the process is to be carried out is called a treatment. For example, in a chemical manufacturing process, a pH of 3 and a temperature of 37 °C together describe a treatment.

- Experimental error – The failure of two identically treated experimental units to give identical results. It includes random error, caused by environmental conditions or other unpredictable factors, and can be inflated by systematic error, mistakes in data entry or mistakes in the design of the experiment itself.

- Hypothesis testing – A statistical method for making decisions from experimental data. A hypothesis is an assumption about a population parameter, and the test assesses whether the data are consistent with it.

- Randomization – A process in which every experimental unit has the same chance of being allocated to any treatment, which avoids bias. It is an essential safeguard against distortion of the results by influences such as rising temperature or drifting instrument calibration.

- Replicate – We perform the experiment more than once, i.e., we repeat the basic experiment. An individual repetition is called a replicate. The number of replicates is the number of experimental units in a treatment.

- Replication – The repetition of treatments on different experimental units. Replication gives a valid and more reliable estimate of experimental error and hence increases the precision of the results.

- Local Control – A means of minimizing experimental error. Randomization and replication do not remove all extraneous sources of variation, so we need to choose a design that brings the remaining sources under control. For this purpose, we use local control.

- Blocking – A method for increasing precision by removing unwanted variation in the inputs or in the process. The experimental units are divided, or partitioned, into groups called blocks in such a way that the units within each block are homogeneous.

- Precision – How close repeated measurements of the same quantity are to one another.

- Accuracy – How close a measured value is to the true value or to a target.

- Residual – A measure of error in a process model. It is the difference between the observed process output (response) and the value predicted by the model.
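
The last three terms are easier to tell apart with numbers. The following is a minimal sketch using only the Python standard library; the measurements and the true value are hypothetical, and the "model" used for the residuals is simply the sample mean.

```python
from statistics import mean, stdev

# Hypothetical repeated measurements of a part whose true length is 10.0 mm.
true_value = 10.0
measurements = [10.2, 10.1, 10.3, 10.2, 10.2]

# Accuracy: how far the average measurement sits from the true value (bias).
bias = mean(measurements) - true_value

# Precision: how tightly the measurements cluster (sample standard deviation).
spread = stdev(measurements)

# Residuals: observed response minus the value predicted by the model.
# The model here is just the sample mean (an assumption for illustration).
predicted = mean(measurements)
residuals = [y - predicted for y in measurements]

print(f"bias = {bias:.3f}, spread = {spread:.3f}")
print("residuals:", [round(r, 3) for r in residuals])
```

In this made-up data set the gauge is fairly precise (small spread) but not accurate (it reads about 0.2 mm high).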

1. Single factor designs

- Completely Randomized Design (CRD) – Here, the treatments are randomly assigned to the experimental units so that each experimental unit has the same chance of receiving any one treatment. Any difference among experimental units receiving the same treatment is considered as an experimental error. It is considered to be most useful in situations where the experimental units are homogeneous and the experiments are small. There is only one primary factor under consideration.

For example, a textile mill has a large number of looms. Each loom is supposed to provide the same output of cloth per minute. To investigate this assumption, five looms are chosen at random, and their output is measured at different times.
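
The loom example can be sketched in code. This is an illustrative sketch only: the output figures are hypothetical, the helper names are invented for this example, and the one-way analysis of variance F statistic is computed by hand rather than with a statistics package.

```python
import random
from statistics import mean

def completely_randomized_order(treatments, replicates, seed=None):
    """Return a shuffled run list with each treatment repeated `replicates` times."""
    runs = [t for t in treatments for _ in range(replicates)]
    random.Random(seed).shuffle(runs)
    return runs

def one_way_f_statistic(groups):
    """One-way ANOVA F statistic from a dict {treatment: [responses]}."""
    all_values = [y for ys in groups.values() for y in ys]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    # Variation between treatment means, and variation within each treatment.
    ss_between = sum(len(ys) * (mean(ys) - grand_mean) ** 2 for ys in groups.values())
    ss_within = sum((y - mean(ys)) ** 2 for ys in groups.values() for y in ys)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

looms = ["loom 1", "loom 2", "loom 3", "loom 4", "loom 5"]
run_order = completely_randomized_order(looms, replicates=3, seed=42)

# Hypothetical output (metres of cloth per minute), three measurements per loom.
output = {
    "loom 1": [14.0, 14.1, 14.2],
    "loom 2": [13.9, 13.8, 14.1],
    "loom 3": [14.1, 14.2, 14.1],
    "loom 4": [13.6, 13.8, 14.0],
    "loom 5": [13.8, 13.6, 13.9],
}
f_stat = one_way_f_statistic(output)
print("run order:", run_order)
print(f"F statistic = {f_stat:.2f}")
```

A large F statistic, compared against the F distribution with 4 and 10 degrees of freedom here, would suggest that the looms do not all have the same mean output.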

- Randomized Block Design (RBD) – It is one of the most widely used experimental designs in industry. If a large number of treatments are to be compared, then a large number of experimental units are required. This increases the variation among the responses, and a CRD may not be appropriate. In a randomized block design, there is still only one primary factor under consideration in the experiment. Similar test subjects are grouped into blocks, and each block is tested against all treatment levels of the primary factor in random order. This is intended to eliminate the possible influence of extraneous factors.

In real-life scenarios, there is rarely just one factor of interest. Usually we want to examine several factors in order to optimize a process better and to arrive at a robust design.
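
A randomized block layout can be sketched as follows. The treatment and block names are hypothetical and the function name is invented for this example; the point is only that every block receives every treatment, with the order randomized separately inside each block.

```python
import random

def randomized_block_layout(treatments, blocks, seed=None):
    """Assign every treatment to every block, in an independent random order per block."""
    rng = random.Random(seed)
    layout = {}
    for block in blocks:
        order = list(treatments)
        rng.shuffle(order)  # randomization happens within the block
        layout[block] = order
    return layout

# Hypothetical example: four treatments compared across three raw-material batches.
treatments = ["T1", "T2", "T3", "T4"]
blocks = ["batch 1", "batch 2", "batch 3"]
layout = randomized_block_layout(treatments, blocks, seed=7)

for block, order in layout.items():
    print(block, "->", order)
```

Because each batch sees all four treatments, batch-to-batch differences can be separated from treatment differences in the analysis.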

2. Multi-factor designs

- Factorial designs – In CRD or RBD, we were primarily concerned with the comparison and estimation of the effects of a single set of treatments, such as varieties of tablets or methods of manufacturing ceramics. These designs deal with only one factor. In a factorial design, there is more than one factor, and test subjects are assigned to every combination of factor levels in random order. The example below illustrates a two-level (2^2) factorial design.

For example, suppose an engineer wishes to study the total power used by each of two different motors (say A & B) running at each of two different speeds (say 1000 & 2000 RPM). This experiment would consist of four treatment combinations: motor A at 1000 RPM, motor B at 1000 RPM, motor A at 2000 RPM and motor B at 2000 RPM. (2^2 Factorial design)

We can use general full factorial designs when one or more factors have more than two levels.
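
The motor and speed example can be written out as a small sketch. The power readings below are hypothetical, chosen only to make the arithmetic easy to follow; the effect calculations are the usual differences of averages for a two-level design.

```python
from itertools import product
from statistics import mean

motors = ["A", "B"]
speeds = [1000, 2000]

# Full factorial: every combination of motor and speed (2 x 2 = 4 runs).
design = list(product(motors, speeds))

# Hypothetical power readings (kW), one per treatment combination.
power = {
    ("A", 1000): 4.0,
    ("A", 2000): 6.0,
    ("B", 1000): 5.0,
    ("B", 2000): 9.0,
}

def main_effect(factor_index, low, high):
    """Average response at the high level minus average response at the low level."""
    high_mean = mean(power[run] for run in design if run[factor_index] == high)
    low_mean = mean(power[run] for run in design if run[factor_index] == low)
    return high_mean - low_mean

motor_effect = main_effect(0, "A", "B")
speed_effect = main_effect(1, 1000, 2000)

# Interaction: half the difference between the speed effect for B and for A.
interaction = ((power[("B", 2000)] - power[("B", 1000)])
               - (power[("A", 2000)] - power[("A", 1000)])) / 2

print(design)
print(motor_effect, speed_effect, interaction)
```

A non-zero interaction, as in these made-up numbers, means the effect of speed depends on which motor is used, which is exactly what a one-factor-at-a-time experiment would miss.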

- Screening designs – A screening design is a type of experiment used to identify the factors that potentially affect a process or product. A manufacturing process typically has many factors, and this design helps to identify the few major ones that hamper the process. After screening is done, we can move on to optimization and process robustness.

Some of the designs used for screening are:

- Definitive screening design – It uses 3 levels per continuous factor (up to 48 factors).

- Plackett-Burman design – It uses 2 levels per continuous factor (up to 47 factors).
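
As an illustration of how economical a screening design can be, the sketch below builds the standard 12-run Plackett-Burman design, which can screen up to 11 two-level factors. The generator row is the commonly tabulated one for 12 runs; the function name is invented for this example, and statistical software would normally produce and randomize the design for you.

```python
# Generator row for the 12-run Plackett-Burman design (+1 = high, -1 = low).
GENERATOR = [1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1]

def plackett_burman_12():
    """Build the 12-run design: 11 cyclic shifts of the generator plus an all-low run."""
    n = len(GENERATOR)
    rows = [GENERATOR[n - shift:] + GENERATOR[:n - shift] for shift in range(n)]
    rows.append([-1] * n)
    return rows

design = plackett_burman_12()
for run in design:
    print(" ".join("+" if level > 0 else "-" for level in run))
```

Each column is balanced (six high and six low settings) and every pair of columns is orthogonal, so the main effect of each factor can be estimated independently of the others from only 12 runs.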

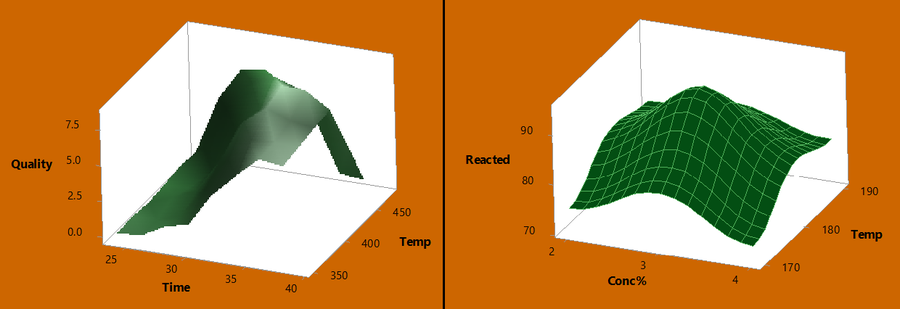

- Response surface designs – These are advanced design of experiments techniques for understanding a process better and optimizing the response. We generally use response surface designs after conducting a factorial experiment and identifying the major factors in a process. The objective is to determine the optimum operating conditions for the process.

Two common types are:

- Central composite design – Useful in sequential experiments because we can often build on previous factorial experiments by adding axial and center points.

- Box-Behnken design – It does not contain an embedded factorial or fractional factorial design, and it ensures that the factors are never all set at their high levels at the same time.
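
The way a central composite design builds on a factorial experiment can be sketched as follows. The function name is invented for this example, points are in coded units, and the axial distance is set to the rotatable value (2^k)^(1/4), which is one common choice and an assumption here.

```python
from itertools import product

def central_composite(k, center_points=1):
    """Return coded design points: factorial corners, axial points and center points."""
    alpha = (2 ** k) ** 0.25  # rotatable axial distance (an assumed choice)

    # Corner points of the two-level factorial at -1 / +1.
    factorial = [list(p) for p in product([-1.0, 1.0], repeat=k)]

    # Axial points: one factor at -alpha or +alpha, all others at the center.
    axial = []
    for i in range(k):
        for sign in (-1.0, 1.0):
            point = [0.0] * k
            point[i] = sign * alpha
            axial.append(point)

    center = [[0.0] * k for _ in range(center_points)]
    return factorial + axial + center

points = central_composite(2, center_points=3)
for p in points:
    print([round(x, 3) for x in p])
```

For two factors this gives 4 corner runs, 4 axial runs and 3 center runs. If the corner runs already exist from an earlier factorial experiment, only the axial and center runs need to be added.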

- Taguchi design (Robust parameter design) – It is a designed experiment that lets us choose a product or process that functions more consistently in the operating environment. It focuses on choosing the levels of controllable factors (or parameters) in a process or a product to achieve two goals:

- The mean of the output response is at the desired level or target specification.

- The variability around this target value is as small as possible.

A process designed with these goals will produce more consistent output. A product designed with these goals will deliver more consistent performance regardless of the environment in which it is used.
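
The two goals can be illustrated with a small sketch. The responses below are hypothetical: each candidate setting of the controllable factors is run under four noise conditions. The "nominal-the-best" signal-to-noise ratio shown is a summary commonly used with Taguchi designs; it is not discussed in the text above and is included only as an assumption for illustration.

```python
from math import log10
from statistics import mean, stdev

target = 50.0

# Hypothetical responses: each control-factor setting run under four noise conditions.
responses = {
    "setting 1": [48.0, 52.0, 47.0, 53.0],
    "setting 2": [49.5, 50.5, 50.0, 50.0],
    "setting 3": [55.0, 55.5, 54.5, 55.0],
}

def summarize(values):
    """Mean, spread, distance from target and nominal-the-best S/N ratio."""
    avg = mean(values)
    sd = stdev(values)
    return {
        "mean": avg,
        "sd": sd,
        "off_target": abs(avg - target),
        "sn": 10 * log10(avg ** 2 / sd ** 2),
    }

summary = {name: summarize(values) for name, values in responses.items()}

# Prefer the setting closest to target; break ties by the smaller spread.
best = min(summary, key=lambda name: (summary[name]["off_target"], summary[name]["sd"]))

for name, stats in summary.items():
    print(name, {key: round(value, 2) for key, value in stats.items()})
print("preferred:", best)
```

Settings 1 and 2 are both on target on average, but setting 2 varies far less across the noise conditions, so it is the more robust choice. Setting 3 is consistent but off target.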

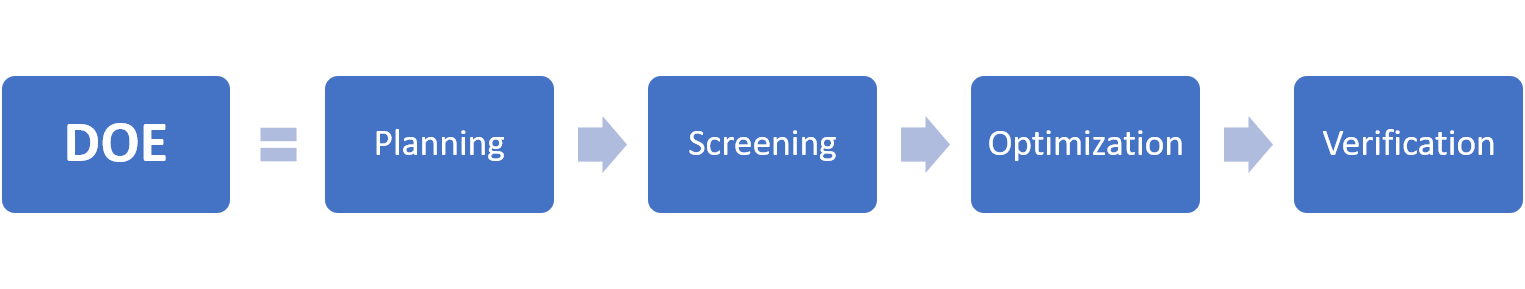

- Planning – Planning is an important phase in designing and analyzing an experiment. Plan the experiment around the problem to be solved. Key steps are:

- Set an objective or a goal.

- Develop an experimental plan that provides meaningful insights.

- Ensure that the process is in control.

- Ensure that the measurement system is acceptable.

- Screening – A manufacturing process usually has many factors, and finding the important ones that hamper the process is difficult. The screening phase identifies the important factors that affect product quality. It is extremely important when working with new systems or technologies, so that valuable resources are not wasted.

- Optimization – The screening phase leaves us with a few factors that affect the process, and we now have to optimize the response. Optimization means finding the settings or levels of the important factors that result in desirable values of the response, which also lets us reduce variation in the process. An optimization phase is usually a follow-up to screening.

- Verification – Here we run the process at the optimal settings to confirm that the factors which were hampering it have been dealt with. In other words, we perform an experiment at the resulting optimal conditions to confirm the optimization results.

- Screening many factors (Selecting the key factors affecting a response).

- Reduced variability and closer conformance to nominal or target requirements.

- Optimization of a process.

- Designing robust products.

- Reduced overall costs and time.

- Established and maintained quality control.

By implementing design of experiments correctly, we can accomplish many important tasks in manufacturing or non-manufacturing processes related to quality control, process optimization, robust design and so on. But there are some challenges which we face while implementing DOE in our process.

Some of them are listed below.

- Lack of training – Management should provide effective training to the employees who will work on the design of experiments, so that they understand the DOE framework and can perform well. Otherwise, errors such as a measurement system (MSA) error can occur and lead to wrong decisions about the process.

- Measurement system analysis (MSA) – MSA helps us to collect data precisely and accurately by detecting the amount of variation that exists within a measurement system. It is a key aspect and should be clearly defined before performing DOE. Otherwise, the experimental results will be misleading.

- Limited Resources – To implement DOE successfully in any process, the necessary resources should be made available. For example, good measurement gauges should be available and software packages should be installed for analyzing DOE data.

- Management support – Management should encourage employees at all levels. They should allow enough time to implement DOE properly and not be impatient about it. They should not be put off by the time and cost it takes to implement. Instead, they should wait patiently for the outcome.

- Difficulty in overcoming old customs – Even when employees have been properly trained in DOE, they may fall back on their previous culture, which makes it difficult to implement DOE successfully. As the saying goes, ‘old habits die hard’. Overcoming this requires considerable support and guidance.

Let us first take a brief look at each topic and then discuss how they relate.

Design of experiments is a structured way of conducting tests to evaluate the factors that affect a response variable. It plays an important role in process and product realization activities, which include new product design and formulation, manufacturing process development and process improvement.

Statistical process control (SPC) is a statistical method used to monitor, control and improve processes by reducing variation. It is a decision-making tool widely used in manufacturing to achieve process stability and continuous improvement in product quality.

In short, SPC tries to reduce process variation by eliminating its causes. SPC charts give an overview of a process, and a Pareto chart shows the most frequently occurring problems. DOE is used to improve the process through experimentation and analysis.

Further explanation – SPC techniques reveal the variation present in a process, the process capability and so on. Applying DOE to the same process then makes it more robust and improves its efficiency and productivity.
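
To make the SPC side of this comparison concrete, the following sketch computes control limits for an individuals chart, one common type of SPC chart. The data are hypothetical and the function name is invented for this example. Sigma is estimated from the average moving range divided by 1.128, the usual constant for ranges of two consecutive points.

```python
from statistics import mean

def individuals_chart_limits(values):
    """Return (lower limit, center line, upper limit) for an individuals chart."""
    center = mean(values)
    # Moving ranges: absolute differences between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma_hat = mean(moving_ranges) / 1.128
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat

# Hypothetical process measurements taken in time order.
values = [10.0, 12.0, 10.0, 12.0, 10.0]
lcl, center, ucl = individuals_chart_limits(values)

out_of_control = [v for v in values if v < lcl or v > ucl]
print(f"LCL = {lcl:.2f}, center = {center:.2f}, UCL = {ucl:.2f}")
print("points outside the limits:", out_of_control)
```

A chart like this tells us whether the process is stable and how much it varies; a designed experiment is then the tool for finding out which factors to change in order to reduce that variation.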

Design of experiment methods are applicable to various types of methodologies.

Some of them are discussed below.

- DMAIC – A well-known Six Sigma methodology focused on improving an existing process. DMAIC stands for Define, Measure, Analyze, Improve and Control. DOE is widely used during the Improve phase.

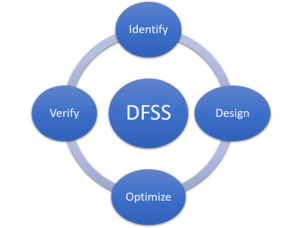

- DFSS – Design for Six Sigma (DFSS) is a business-oriented method for designing new products and processes that perform at Six Sigma quality levels (new product development). Traditional Six Sigma projects use DMAIC, while DFSS uses methodologies such as IDOV, which stands for Identify, Design, Optimize and Verify. During the Optimize phase, DOE is applied for process optimization and to make the design robust.

To enhance success in Six Sigma and Lean Six Sigma projects, DOE has to be applied correctly. Apart from these scenarios, it can be applied in R&D, engineering, quality control and so on.

Design of Experiments is a technique for systematically applying statistics to an experimentation process. It consists of a series of tests in which changes are made to the input variables of a product or process. We then observe and identify the reasons for the resulting changes in the response variable. It provides a quick and cost-effective method to understand and optimize products and processes.

DOE was initially used in the agricultural sector to improve crop productivity. As it evolved, it was adopted in discrete manufacturing (automobiles, defense, aerospace, etc.) and process manufacturing (glass, pharmaceuticals, beverages, etc.). It has now been implemented successfully across manufacturing industries, where it has supported continuous improvement in process quality.

In recent years, DOE (along with SPC) has been implemented in various service sectors (healthcare, financial institutions, call centres, etc.). The service industry plays an important part in our lives, offering services we need, such as hospitals, airlines and banks. For example, we often travel by air for work or on holiday and stay in a hotel. If we fly with a particular airline and do not get the essential services, will we fly with that airline again? Similarly, if we stay at a particular hotel and do not get the required services, will we stay at that hotel again? The answer will be no. Hence the customer's response is paramount. By implementing DOE, we can achieve a good balance between quality and cost, which helps to improve service quality and performance. Excellent service quality is also noted as a major factor in making a profit in the service sector.

Some examples are:

- Healthcare – DOE can help improve patient care by reducing waiting time and by supporting clinical trials, operational performance and so on.

- Banking – DOE can help improve customer service by reducing waiting time, the percentage of errors in customer profiles, etc.

- Customer service – DOE can help improve customer service by reducing call waiting time, improving call response, etc.
